I gave a quick presentation to the Fall RCOS group to provide a brief overview of my project. Here are the slides I presented:

Category: Bonsai Video

Bonsai Video provides a simple web interface to manage, organize, and distribute videos in a variety of file formats and distribution techniques.

Identifying Blank Thumbnails

When a video is imported or uploaded, the system automatically generates 3 thumbnails of the video (beginning, middle, end). A lot of videos begin with a fade up from black, and someone pointed out during my presentation last Friday that it might be neat if there was a way to avoid adding empty (all black) thumbnails.

To accomplish this, I use RMagick, a Ruby wrapper for ImageMagick, to compare the generated thumbnail with a plain black image that I keep on disk. Here’s the snippet of code that makes it happen:

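    def black(args = nil)
      require 'RMagick'
      # Load the stored black reference image and the thumbnail to test
      src_black = Magick::ImageList.new(RAILS_ROOT+'/public/images/black.jpg')
      source = Magick::ImageList.new(args[:path])
      # difference needs equal dimensions, so scale the reference to match
      black = src_black.scale(source.columns, source.rows)
      # Index 1 of the result is the normalized mean error
      if source.difference(black)[1] < 0.01
        return true
      else
        return false
      end
    end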

The difference method only works when the images are of equal size, which is why I have the intermediary resize step. It returns an array of values, and I found the ‘normalized mean error’ (the second element) to be a pretty good indicator. When comparing a black thumbnail with the black image, that value was on the order of 1e-5, while a regular thumbnail generated a number in the range of 0.50 to 0.90, so I opted for the 0.01 threshold. That should be enough to catch all-black images but still allow fairly dark thumbnails to pass through the system.
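To make the threshold choice a little more concrete, here is a minimal sketch in plain Ruby. It does not call RMagick: normalized_mean_error below is a hypothetical stand-in that averages how far each 8-bit sample sits from black, and the sample arrays are made up for illustration. RMagick's own formula may differ in detail.

```ruby
# Hypothetical stand-in for comparing a thumbnail against a black image:
# the average distance of each 8-bit sample from 0, scaled to 0.0..1.0.
# This is an illustration of the idea, not RMagick's exact calculation.
def normalized_mean_error(samples, max_value = 255)
  return 0.0 if samples.empty?
  samples.sum.to_f / (samples.length * max_value)
end

BLACK_THRESHOLD = 0.01 # same cutoff used in the snippet above

# True when the samples are close enough to black to be skipped.
def mostly_black?(samples)
  normalized_mean_error(samples) < BLACK_THRESHOLD
end

near_black = [0, 1, 0, 2, 0, 1]        # made-up values: noise around black
dark_scene = [10, 25, 40, 12, 30, 18]  # made-up values: dim but real content

puts mostly_black?(near_black)
puts mostly_black?(dark_scene)
```

The gap between the two cases is what makes a fixed cutoff workable: compression noise stays well under 0.01, while even a dim scene lands far above it.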

Background Processing Changes

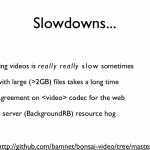

I spent most of my time this week trying to make things go faster without upgrading the old server things are running on. One of the bigger slowdowns I noticed was BackgrounDRb, which was not only taking up a hefty chunk of memory when running but was also querying the database non-stop looking for new jobs in the queue.

I ended up replacing BackgrounDRb with Workling/Starling. While I lost the persistent job queue (something I’ll look into handling internally), the performance gains I’ve noticed are pretty significant. This change has been committed and pushed to GitHub. I was looking forward to saying you can check out how I did it here, but it looks like the change was too large for GitHub to track. You can always download a local copy of the code and see what I changed if you’re interested.

It boiled down to installing Workling, Starling, and some dependencies (like daemons). Converting the actual background scripts was pretty straightforward. I had to change the top of the classes to reference Workling instead of BackgrounDRb and strip out references to the persistent job queue. In the controllers and models that call the conversion, I adjusted my function usage slightly (removed the hash wrapper I had on my arguments) and presto, things were working.

On another note, this week the Concerto team launched the Concerto public site at http://www.concerto-signage.com/. It’s a pretty great looking site! I also spent some time working on the new version of Shuttle Tracking at RPI, which will be released under an open source license this year. I’ve slowly been rewriting the user portion of the application in Ruby on Rails and trying to optimize the code as much as I can. The JavaScript has also been completely reworked so we can use the faster Google Maps V3 API once it adds support for polylines, or something else that will allow us to draw the shuttle routes.

Update Presentation

I just finished putting together my second presentation for RCOS reviewing the status of my project. The slides I plan on presenting are included below for your review.

Additionally, I just committed some of the plug-in architecture used to play back videos. Right now I have included code to play back videos via Flowplayer (a Flash-based video player), QuickTime, “Video for Everybody”, and a simple <video> tag to play Ogg videos in Firefox.

Interface Cleanup & Side work

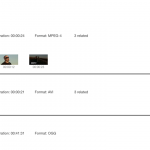

I spent lots of time last week cleaning up the main interface used to interact with video in my project. Most of those changes are reflected on GitHub, and there are a few more I’d like to iron out early this week. I’ve uploaded a screenshot of the interface with a sample video I’m working on. I haven’t yet solved the background processing slowdown, but I’ve found that I can disable BackgrounDRb (./script/backgroundrb stop) when I’m quickly iterating through interface designs, and pages then load pretty quickly.

Most of my time is now going to be spent finishing up the outgoing parts of the project, the components that facilitate the playback of video. I found this cool blog post about universal video playback in browsers, which uses a wide variety of playback elements that each degrade gracefully to the next one: http://camendesign.com/code/video_for_everybody.

Additionally, I’ve spent some time working on Concerto, a pretty popular open source project started at RPI and run by the Web Tech Group. I remembered enough C# to write a Concerto screensaver for Windows, a neat addition to help show Concerto advertisements on individual laptops and computer screens. Right now the code exists in an alpha state, and if you’re really interested you can check out the source code here: http://dev.studentsenate.rpi.edu/repositories/svn-senate/browser/extras/screensaver_win. Don’t worry if you’re a Mac user: we’ve recently updated the production server to the latest API version, which produces RSS feeds that work by default in the “MobileMe and RSS” screen saver that comes with OS X. Just add a URL like http://signage.rpi.edu/content/render/?select_id=1 to start drawing content from the Service & Community feed. Linux users, it looks like you are out of luck right now, though I encourage you to write your own until we get around to it.

On the back end, I made some changes to Concerto in the hopes of speeding up performance. Image resizing & rendering was refactored a bit in an effort to streamline the process and I added memcache to speed up load times for browsers that can’t hold tons of images in their local cache.

BackgrounDRb Slowdown

I finished transitioning the thumbnail generation to a background process, using the same approach I use for video conversion because it seems like a thumbnail is just a special conversion. You can check out the commit here if you’re interested.

Adding another BackgrounDRb worker was easy to do, but I’m finding that it’s wreaking havoc on my server. The box I’m developing Bonsai Video on is pretty lightweight (it might be my lightest, now that I think about it). I chose it to force myself to make sure my code would work well on slower boxes. So far I haven’t had any major issues. FFMPEG seems to convert videos fairly quickly, and up until today everything seemed under control. Combined, the 3 BackgrounDRb workers are using somewhere between 25% and 40% of my system’s memory, as reported by top. I’m not sure why the processes are using so much when they are just sitting there waiting for work, and I doubt the SQL overhead of polling for new jobs (I use the persistent job queue) is that overwhelming.

This has made regular site navigation terribly slow, but at least pages don’t time out like they previously did when generating thumbnails. I think I have 3 options to fix things:

- Refactor and combine the workers, so both videos and thumbnails are handled by the same worker. I suspect this might save me 10-15% on my memory usage, but it’s not as clean as I’d like it to be.

- Replace BackgrounDRb with something else that may be a bit faster or more efficient. It looks like other people have experienced the same problems I’ve had, maybe just on a smaller scale since they have more powerful servers. I would rather avoid this approach, since it means more development time reworking things I’ve already done.

- Buy more RAM or upgrade the server. This one is tough for me, as I’d like this to be able to run on a fairly low-end machine, but I’ve found that Ruby on Rails has higher system requirements than something like PHP would. Additionally, this costs money.

For now, I’m just going to turn off the background workers so I can do some interface work.

Thumbnailing Adjustments

The current thumbnail generation code runs in the same process as the web request that asks for the thumbnails. It’s much easier to extract a thumbnail image in the same code that creates the thumbnail object, and it worked pretty fast for a lot of my smaller test files. Now that I’ve started to work with larger and longer files, I’ve found the thumbnail generation process (which is handled via this command: ffmpeg -i file.avi -y -deinterlace -f image2 -ss 00:12:34 image.jpg) has been too slow to be acceptable. I imagine that as video codecs get more complicated in order to compress video while maintaining quality, extracting a single frame from the middle of a stream gets harder.

To fix this I’m going to have to rewrite the thumbnail generation to be handled like video conversion and run as a background job. It unfortunately won’t present users with their thumbnails as quickly as I’d like (no one likes to see a page saying “In Progress…”), but it should fix the long load times and subsequent timeouts caused by thumbnail generation.
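As a rough sketch of how that command might be assembled in Ruby, the helper below builds the argument list for one thumbnail. The names timecode and thumbnail_command are hypothetical, and two things here are not in the command quoted above: the option to place -ss before -i (which asks ffmpeg to seek in the input instead of decoding and discarding frames up to the timestamp, and is generally faster on long files) and the -vframes 1 flag that stops after a single frame. Treat both as assumptions to test against your own ffmpeg build.

```ruby
# Format a whole number of seconds as HH:MM:SS for ffmpeg's -ss option.
def timecode(seconds)
  format('%02d:%02d:%02d', seconds / 3600, (seconds % 3600) / 60, seconds % 60)
end

# Build the argument list for a single-frame extraction (hypothetical helper).
# fast_seek: true puts -ss before -i (input seeking); false mirrors the
# command quoted above, where -ss follows -i (output seeking).
def thumbnail_command(input, output, seconds, fast_seek: true)
  seek = ['-ss', timecode(seconds)]
  cmd = ['ffmpeg', '-y']
  cmd += seek if fast_seek
  cmd += ['-i', input]
  cmd += seek unless fast_seek
  # -vframes 1 is an addition for this sketch: stop after one frame.
  cmd + ['-deinterlace', '-f', 'image2', '-vframes', '1', output]
end

puts thumbnail_command('file.avi', 'image.jpg', 754).join(' ')
```

Passing the array to system(*thumbnail_command(...)) avoids going through a shell, so file names with spaces do not need extra quoting.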

Conversion Job Pool

In my previous post I talked about some of the concepts I use to convert videos to various formats. Yesterday I finished the implementation that allows multiple video conversions to occur at the same time, so let me walk you through some of the more technical details to the best of my understanding.

BackgrounDRb does all the hard work, and can handle the idea of a job pool. What happens is this: there is one worker that is started by default. Instead of using this worker to handle the video conversion, I use it as the queue manager. My conversion controller calls something like this to send a new conversion request to the queue:

    MiddleMan.worker(:video_worker).enq_queue_convert(:args => {:conversion_id => @conversion.id}, :job_key => @conversion.id)

In my video worker, I have two methods: one for the queue_convert calls, and one that actually handles the conversion.

    # Queue up the video for conversion
    def queue_convert(args)
      conversion = Conversion.find(args[:conversion_id])
      conversion.update_attributes({:status => "queued"})
      thread_pool.defer(:convert, args)
    end

    # Run the conversion of the video
    def convert(args = nil)
      logger.info("Calling convert method for conversion #{args[:conversion_id]}.")
      conversion = Conversion.find(args[:conversion_id])
      # do stuff
      # Mark this job as complete in the job queue
      persistent_job.finish!
    end

My understanding is that the thread_pool.defer method puts the job into a persistent queue, such that if everything crashes before the job starts it can still recover. If the maximum number of workers hasn’t been reached, a new one is spawned to handle the request. For my uses, it would be nice if I could write a bit more code to choose when a new worker spawns. Free memory and processor usage are much more important than the total number of processes when it comes to video conversion. Time permitting, I might dive into BackgrounDRb and see how easy it would be to change that around a bit. In the convert method, the only important line is at the end: persistent_job.finish!, which marks the job as complete. I suspect this only frees the worker to look for another job or shut down. During my tests, a job that crashed halfway through its run (let’s say FFMPEG segfaulted in the middle of my convert code) was not automatically retried when BackgrounDRb was restarted.

Since converting videos can take a really long time, I have a conversion model to track the status of a video that is being processed by the video worker. I think I could have implemented something with BackgrounDRb’s result cache, but it struck me as not the most persistent way to track details about a conversion.
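The general job pool idea can be sketched with nothing but Ruby’s standard library. To be clear, this is not how BackgrounDRb implements it, and it has no persistence: run_pool and MAX_WORKERS are hypothetical names, and the block stands in for the real conversion work. It only illustrates a fixed cap on how many jobs run at once.

```ruby
require 'thread'

# Upper bound on simultaneous jobs (hypothetical setting for this sketch).
MAX_WORKERS = 2

# Run the given block once per job id, using at most max_workers threads.
# Returns [id, result] pairs sorted by id.
def run_pool(job_ids, max_workers = MAX_WORKERS)
  queue = Queue.new
  job_ids.each { |id| queue << id }
  results = Queue.new

  workers = Array.new([max_workers, job_ids.size].min) do
    Thread.new do
      loop do
        id = begin
          queue.pop(true) # non-blocking pop raises ThreadError when empty
        rescue ThreadError
          break           # no jobs left, let this worker finish
        end
        results << [id, yield(id)]
      end
    end
  end

  workers.each(&:join)
  Array.new(results.size) { results.pop }.sort_by(&:first)
end

p run_pool([1, 2, 3]) { |id| "converted #{id}" }
```

A memory-aware version would replace the fixed cap with a check made before each worker picks up a job, which is the kind of hook described above.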

If you’re looking for the actual code that *works* for me, you can check it out on GitHub: http://github.com/bamnet/bonsai-video/tree/master

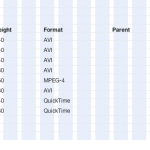

Converting Video

I spent this week working on tools to convert videos to different formats. My main goal was to allow people to specify ffmpeg conversion settings that could be used to render something into a “web-friendly” format like flv, h264, or even ogg. To do this, I have a profile model that stores a command (string) with infile and outfile dummy parameters. You select a video you want to convert, choose the conversion profile, and send it off into the sunset.

Right now the “sunset” consists of a BackgrounDRb worker. I found it pretty challenging to debug while I was working on it. BackgrounDRb gives you a very limited trace of the error, and it never pinpointed which line in my video worker was making it unhappy. When things work well, the video conversion worker does a great job. Videos convert, they’re created in the system, and they’re associated with the parent. No problems at all. The tricky part is when a video conversion fails. Right now I don’t have any way to tell whether ffmpeg is having a good time or a bad time converting, which would be a really handy feature. Ideally, I’d be able to grab the last line or two from the FFMPEG output that show the status, fps, etc. I might look into this with more time.
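Here is a minimal sketch of how a profile’s command string could be filled in. The placeholder tokens INFILE and OUTFILE, the helper name, and the sample profile command are all assumptions for illustration; the post only says the stored command has infile and outfile dummy parameters.

```ruby
require 'shellwords'

# Hypothetical placeholder tokens used inside a profile's command string.
TOKENS = /INFILE|OUTFILE/

# Substitute both placeholders in a single pass, shell-escaping each path
# so file names with spaces or quotes cannot break the command line.
def build_conversion_command(template, infile, outfile)
  template.gsub(TOKENS,
                'INFILE'  => Shellwords.escape(infile),
                'OUTFILE' => Shellwords.escape(outfile))
end

# Made-up profile command for illustration only.
profile_command = 'ffmpeg -i INFILE -y OUTFILE'
puts build_conversion_command(profile_command, 'my video.avi', 'out.flv')
```

Doing the substitution in one gsub call means a file name that happens to contain the other token is never substituted a second time.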

Additionally, I’m working on some code to support more than one worker running at the same time. Right now I spawn 1 video worker, which queues up all the requests to convert video… ideally I think I’d like to enable users to define how many conversions go on at the same time so faster machines could handle more conversion processes.

Importing, Uploading, Thumbnailing

Last week was a busy week for me on the programming end of things… sorry about the lack of a blog post!

I decided to temporarily halt work on the video “collection” concept (grouping similar videos together isn’t as important as the videos themselves!) and focus back on the workflow for uploading a video. I’m happy to say things look and flow much better now. Let me walk you through what happens.

- Visit the Add Video page

- Give the video a title

- Choose to Upload a file or Import a file on the server. Importing works best for really large files, where it might make sense to ftp or scp them over manually before bringing them into the software.

- I use MediaInfo to read some metadata about the video file, like duration, codecs, etc., and store it back on the Video model.

- Then, I generate 3 thumbnails at the beginning, middle and end of the video. They are also stored in 2 smaller versions (like thumbnail, and preview).

- Presto! Upload complete.
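The thumbnail step in the list above needs three timestamps derived from the duration that the metadata step stored. A minimal sketch of that calculation follows; thumbnail_offsets is a hypothetical helper, and the 1 second margin that keeps the first and last frames away from the exact edges of the file is an assumption, not something the project is known to do.

```ruby
# Pick offsets (in whole seconds) for the beginning, middle, and end
# thumbnails of a video with the given duration in seconds.
# The margin default of 1 second is an assumption for this sketch.
def thumbnail_offsets(duration, margin = 1)
  last = [duration - margin, 0].max
  first = [margin, last].min
  [first, duration / 2, last]
end

p thumbnail_offsets(600)
```

Clamping both ends keeps very short clips from producing negative or out-of-range offsets.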

The thumbnail functionality is finished, such that you can manually specify a timecode anywhere in the video to generate a new thumbnail.

I have run into a few bumps with really high quality files: the thumbnail generation takes longer than the web browser is willing to wait, so the browser times out. The process continues on in the background, but it’s still not the cleanest implementation.

Next on my list of things to work on is video conversion.

Oh yeah, here are some screenshots of the current interface: